Bitrate too low when recording, or is this normal?

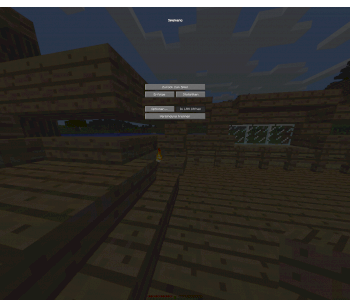

I'm a Minecraft Let's Player, and my recordings are usually very sharp. Sometimes, however, my video gets "blurry", and I think I've worked out that this mainly happens when there's a lot of green around me (like a normal Minecraft world with lots of grass blocks). It looks like a stream whose bitrate is too low. I currently record at a bitrate of 60,000. That was recommended to me, or rather, I had previously been recommended 30,000. In the recording program I can go up to 9,999,999, but can my PC handle that, or is the bitrate even the cause?

I have an Intel Core i7 8700, an NVIDIA GeForce GTX 1080 and 16 GB of RAM.

Or is it normal that you simply can't record at a quality high enough for everything to look razor sharp? Because even with some big Let's Players, I often see blurry spots.

Sorry for my little problems. xD But I'm about to start on YouTube, and everything should be as perfect as possible for my viewers.

60k bitrate? Um, you've got something mixed up there. For 1080p you should use a bitrate of 6000 and the x264 preset on medium; then it looks good. Try it.

Because even with some big Let's players, I often see blurry spots.

With your hardware you should have absolutely no problem streaming 1080p extremely sharp; the others simply have the wrong settings.

Sorry, maybe I put that badly. I mean, the picture looks pretty much like a stream with too low a bitrate. But in my case it's about recording. ^^

Do you record via the CPU or the GPU? The GPU inevitably always gives poorer quality.

This is what YouTube says about your problem: https://support.google.com/...2171?hl=de

Okay, thanks. So it may be because I record at too high a resolution?

And there's something I don't quite understand:

Am I making SDR or HDR uploads?

Does 12 MBit mean a bitrate of 12,000?

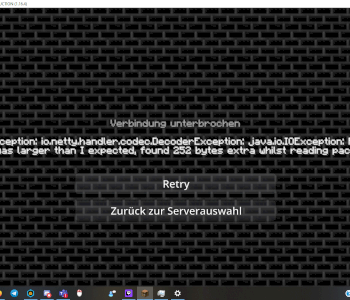

Do you have the problem only on YouTube, or are the videos also blurry on the PC? If it only occurs on YouTube, then you can't do anything about it, because YouTube compresses everything so heavily for small YouTubers that the quality is always bad.

If this also happens on the PC, then select a different codec in the recording program. Use the H.264 codec.

High Dynamic Range Video (HDR video) offers a greater dynamic range and color gamut than Standard Dynamic Range video (SDR).

https://de.wikipedia.org/wiki/High_Dynamic_Range_Video

The "MBit" means Mbps.

Mbit/s stands for "megabits per second" and describes how many megabits your device or your connection processes per second… Therefore: 1 megabit = 1,000,000 bits.
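To make the unit question concrete, here is a rough sketch of the conversion in Python. It assumes the bitrate field in the recording program is in kilobits per second (kbps), which is common but not confirmed anywhere in this thread; the function names are made up for illustration.

```python
# Assumption: the recording program's bitrate field is in kbps
# (kilobits per second). Check the unit shown next to the field.
BITS_PER_KILOBIT = 1_000
BITS_PER_MEGABIT = 1_000_000


def mbps_to_kbps(mbps: float) -> float:
    """Convert megabits per second to kilobits per second."""
    return mbps * BITS_PER_MEGABIT / BITS_PER_KILOBIT


def kbps_to_mbps(kbps: float) -> float:
    """Convert kilobits per second to megabits per second."""
    return kbps * BITS_PER_KILOBIT / BITS_PER_MEGABIT


if __name__ == "__main__":
    # A value given as 12 Mbit/s, expressed in kbps
    print(mbps_to_kbps(12))       # 12000.0
    # The 60,000 currently entered in the recorder, expressed in Mbit/s
    print(kbps_to_mbps(60_000))   # 60.0
```

Under that assumption, a value given as 12 Mbit/s would be entered as 12,000, and the 60,000 mentioned above would correspond to 60 Mbit/s.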

Okay, but would that mean that I need a bitrate of 9,999,999, or what? I don't get it.

Of course not!

Here's how much bitrate you need: https://support.google.com/...2171?hl=de

If you don't get it, I can't help you.

But there the bitrate is only given in Mbps. What is one supposed to enter for the bitrate now?